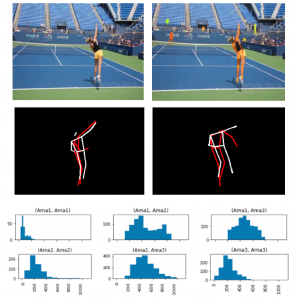

It is no secret that the use of advanced analytics has boomed in sports. As athletes push human limits further every year, they look to every tool that could help optimize their training. With the recent progress in computer vision and machine learning, it is only natural that these technologies find their way into professional sports and raise new questions.

One of these is: what if we could use computer vision to analyze an athlete’s posture and provide insight to help that athlete perfect their craft? Well, that is exactly the question that the ongoing research project of Yoshi, our head of AI, aims to explore with tennis players:

The serve is a very important phase of a tennis game, in which athletes perform a series of movements carefully perfected over hours and hours of training. Being able to analyze the body’s posture is an extremely valuable asset in a player’s quest for improvement, be they amateur or professional. This led us to wonder: what other sports could benefit from such an approach?

As an archery enthusiast, Yoshi did not take long to figure out the answer, and he proposed bringing together his interest in the sport and Tokyo Techies’ experience in computer vision.

The ancient art of the bow, in its modern form, is a perfect candidate for this. The minutely crafted posture of the shooter is an essential aspect of the sport. It also presents a few practical advantages over more mobile sports on the technical side, especially for pose estimation. First, the athlete’s lower body is mostly static when executing the movements, which greatly simplifies tracking and normalization, as well as data gathering: fixed cameras are enough to capture the necessary input video, rather than moving ones. Second, the different phases in the shooting process are pretty well defined, making a systematic approach meaningful and likely to produce useful results.

We at TT were very excited about this idea and decided to test it out. Before anything though, it is essential to understand the sport, and since not all of us were familiar with it, what better way to start the project than with a bit of hands-on practice?

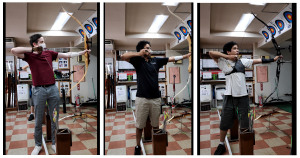

Along with Yoshi came UJ (COO) and myself (Head of AI Solution) on this trip to the shooting range. It was the first archery experience for both UJ and me, but we got hooked immediately! There is something thrilling about bow shooting that combines a clear and peaceful state of mind with an extremely fun stress reliever. After equipping finger tabs and armbands to protect our precious fingers and arms from the bowstring, we were ready to grab a bow and take on the challenge. On the surface, the process is quite simple: position yourself, pick up and “nock” an arrow (place it against the bowstring), draw, aim, and finally release. Hopefully, the arrow ends up somewhere near the target. In practice, it is obviously more difficult than it seems, but here are a few things we learned during this short session:

- Generally, the posture should be straight and vertical along the shoulder-hips-feet axis. Combined with the horizontal axis of the arms, this is referred to as the “T” pose.

- A good posture is a stable one: it should be comfortable and induce as little movement as possible. One example is the knees, which should be kept straight with no bend, but not “locked”.

- Slightly putting weight on the front feet helps to keep balance.

- When a stable posture is achieved, the sight pin helping the aim has a slow back and forth movement. When unstable, the movement is more erratic and it becomes harder to aim.
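The “T” pose described above lends itself to a simple geometric check once body keypoints are available. The following is a minimal sketch, assuming 2D keypoints in image coordinates as a pose estimator might produce them; the keypoint names (`shoulders`, `feet`, `bow_hand`, `draw_elbow`) and the functions are hypothetical and not part of our pipeline, which has not settled on a pose estimation algorithm yet.

```python
import math


def angle_from_vertical(top, bottom):
    """Angle in degrees between the segment top -> bottom and the vertical axis."""
    dx = bottom[0] - top[0]
    dy = bottom[1] - top[1]
    return math.degrees(math.atan2(abs(dx), abs(dy)))


def angle_from_horizontal(left, right):
    """Angle in degrees between the segment left -> right and the horizontal axis."""
    dx = right[0] - left[0]
    dy = right[1] - left[1]
    return math.degrees(math.atan2(abs(dy), abs(dx)))


def t_pose_deviation(keypoints):
    """Return (body_tilt, arm_tilt) in degrees; (0, 0) would be a perfect "T".

    `keypoints` maps hypothetical names to (x, y) pixel positions.
    """
    body_tilt = angle_from_vertical(keypoints["shoulders"], keypoints["feet"])
    arm_tilt = angle_from_horizontal(keypoints["bow_hand"], keypoints["draw_elbow"])
    return body_tilt, arm_tilt


# Made-up keypoints for illustration only (pixels, y axis pointing down).
example = {
    "shoulders": (320, 200),
    "feet": (320, 620),
    "bow_hand": (120, 205),
    "draw_elbow": (480, 205),
}
body_tilt, arm_tilt = t_pose_deviation(example)
```

Tracking these two angles over the frames of a shot would be one possible way to quantify how close a shooter stays to the “T” pose.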

Even simply hitting the target was hard at first, but the progression was fast and very rewarding! Besides familiarizing ourselves with the sport, our arrow shooting session had a second goal: data gathering. We set two smartphones up on tripods to record ourselves: one to capture our body, and the second one recording through a lens aimed at the target.

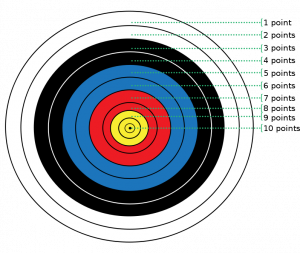

That way, we could record the shooter’s score alongside the corresponding pose and, hopefully, use it as an evaluation metric. The scoring system in archery is thankfully rather simple:

- the center disk is 10 points

- every outer ring from the center has a value decreased by 1 (so the yellow ring around the center disk is 9 points, then 8, etc. all the way to the last white ring with a value of 1 point)

- an arrow touching a line scores the value of the inner (higher-scoring) ring bordering that line
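These scoring rules can be sketched as a small function. This is a minimal illustration under a few assumptions not stated above: all ten zones have the same width (for example 6.1 cm on a 122 cm face), an arrow outside the last ring scores 0, and the function and parameter names are hypothetical.

```python
import math


def ring_score(distance_cm, ring_width_cm, shaft_radius_cm=0.0):
    """Score for an arrow whose centre lands `distance_cm` from the target centre.

    Assumes ten equally wide zones; a miss outside the last ring scores 0.
    """
    # An arrow touching a line gets the inner ring's value, so measure from
    # the edge of the shaft that is closest to the centre.
    effective = max(distance_cm - shaft_radius_cm, 0.0)
    # ceil(...) - 1 keeps an arrow lying exactly on a line in the inner ring.
    ring_index = max(math.ceil(effective / ring_width_cm) - 1, 0)
    if ring_index >= 10:
        return 0
    return 10 - ring_index


# Illustrative values with 1 cm wide rings to keep the arithmetic obvious.
centre_hit = ring_score(0.5, ring_width_cm=1.0)
on_first_line = ring_score(1.0, ring_width_cm=1.0)
first_ring = ring_score(1.5, ring_width_cm=1.0)
```

In this example `centre_hit` and `on_first_line` both evaluate to 10, while `first_ring` evaluates to 9.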

But since an image is worth more than a thousand words, here is the point distribution on the target:

With this initial data gathering and introductory archery session done, it was time to get back to the office and move on to the next steps. One of these was to synchronize the shooting videos with the target videos, since associating the correct score (hit on the target) with the correct pose will be quite useful down the line. To that end, we were able to use the sound from the videos: both sets were recorded with an audio track in the same environment, so they captured roughly the same sounds at the same time. Therefore, if we are able to align the audio tracks, we are able to align the videos as well!

But first of all, a quick sanity check: the speed of sound in air is about 343 m/s at 20°C in normal atmospheric conditions. Sound therefore takes about 15 ms to cover 5 m, which is less than the 17 ms frame duration of a video with a 60 fps frame rate. So as long as these conditions hold, the synchronization should be accurate down to a frame. In our case, the cameras were less than 2 m apart and the frame rate of the videos was 30 fps (about 33 ms per frame), meaning sound propagation should not be an issue in our synchronization.

The actual synchronization process relies on the extraction and alignment of peak frequencies of our audio tracks. The approach is described in this article.
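The sanity check is simple arithmetic, and the idea of aligning two audio tracks can be illustrated with a toy example. The sketch below is only an illustration under stated assumptions: it uses a brute-force cross-correlation of raw samples as a simpler stand-in for the peak-frequency method of the referenced article, the signals are made up, and all function names are hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0  # in air at about 20 °C, value quoted in the text


def sound_delay_ms(distance_m, speed_m_s=SPEED_OF_SOUND_M_S):
    """Time in milliseconds for sound to travel `distance_m` metres."""
    return distance_m / speed_m_s * 1000.0


def frame_duration_ms(fps):
    """Duration of one video frame in milliseconds."""
    return 1000.0 / fps


def delay_fits_in_one_frame(distance_m, fps):
    """True if the propagation delay is shorter than a single frame."""
    return sound_delay_ms(distance_m) < frame_duration_ms(fps)


def best_lag(ref, other, max_lag):
    """Lag in samples at which `other` best matches `ref`.

    A positive lag means events appear `lag` samples later in `other`.
    Brute-force cross-correlation (a stand-in, not the article's method).
    """
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, r in enumerate(ref):
            j = i + lag
            if 0 <= j < len(other):
                score += r * other[j]
        if score > best_score:
            best, best_score = lag, score
    return best


def lag_to_frames(lag_samples, sample_rate_hz, fps):
    """Convert an audio lag in samples to a (fractional) number of video frames."""
    return lag_samples / sample_rate_hz * fps


# Toy signals: the same short "click", 7 samples later in the second track.
click = [1.0, -0.5, 0.25]
track_a = [0.0] * 10 + click + [0.0] * 37
track_b = [0.0] * 17 + click + [0.0] * 30
lag = best_lag(track_a, track_b, max_lag=20)
```

Here `lag` comes out as 7 samples, and `delay_fits_in_one_frame(2, 30)` holds for our setup. A brute-force search like this is only practical for short or heavily downsampled signals; real recordings would call for an FFT-based correlation or a fingerprinting approach such as the one the article describes.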

And that is it for this time! The next steps include automating the process of extracting single shots from the videos, settling on our pose estimation algorithm to track the shooter’s posture, and of course, getting more data.